What happens when careful, scientific commentary is quietly erased by a platform’s automated filters? On August 26, 2025, I experienced this firsthand on Elon Musk’s X. The occasion was an academically unexciting series of three consecutive comments about food science, but their removal reveals X’s insidious thought censorship.

The Incident

On Aug 26, 2025, I posted commentary about cheese — a complex emulsion of fat and water stabilized by phospholipids and casein micelles. Cheese acts as a natural SEDDS (Self-Emulsifying Drug Delivery System), enhancing bioavailability of lipophilic phytochemicals like curcumin, bixin, crocetin, safranal, gingerols and shogaols, thymol and carvacrol, astaxanthin, and more.

One of these compounds, bixin, and its close relative norbixin come from annatto and are used to color cheese. And here is where our food-science story takes an interesting turn.

In the U.S., most whey protein is made from the whey of Cheddar cheese colored with annatto. Annatto’s natural carotenoids, bixin and norbixin, bind to the milk protein, turning both it and the cheese yellow. The whey left over from cheesemaking is sold to whey protein powder manufacturers. They treat the coloration as a problem and have sought to remove the offending pigments to make the powder white, health benefits be damned!

They could market it:

“Our whey is enriched with naturally occurring carotenoids from annatto — supporting vision and cognitive health.”

Instead, they try to remove the color to produce a white powder.

A study published in the Journal of Dairy Science, https://www.journalofdairyscience.org/article/S0022-0302(14)00242-2/fulltext, describes this process and its implications.

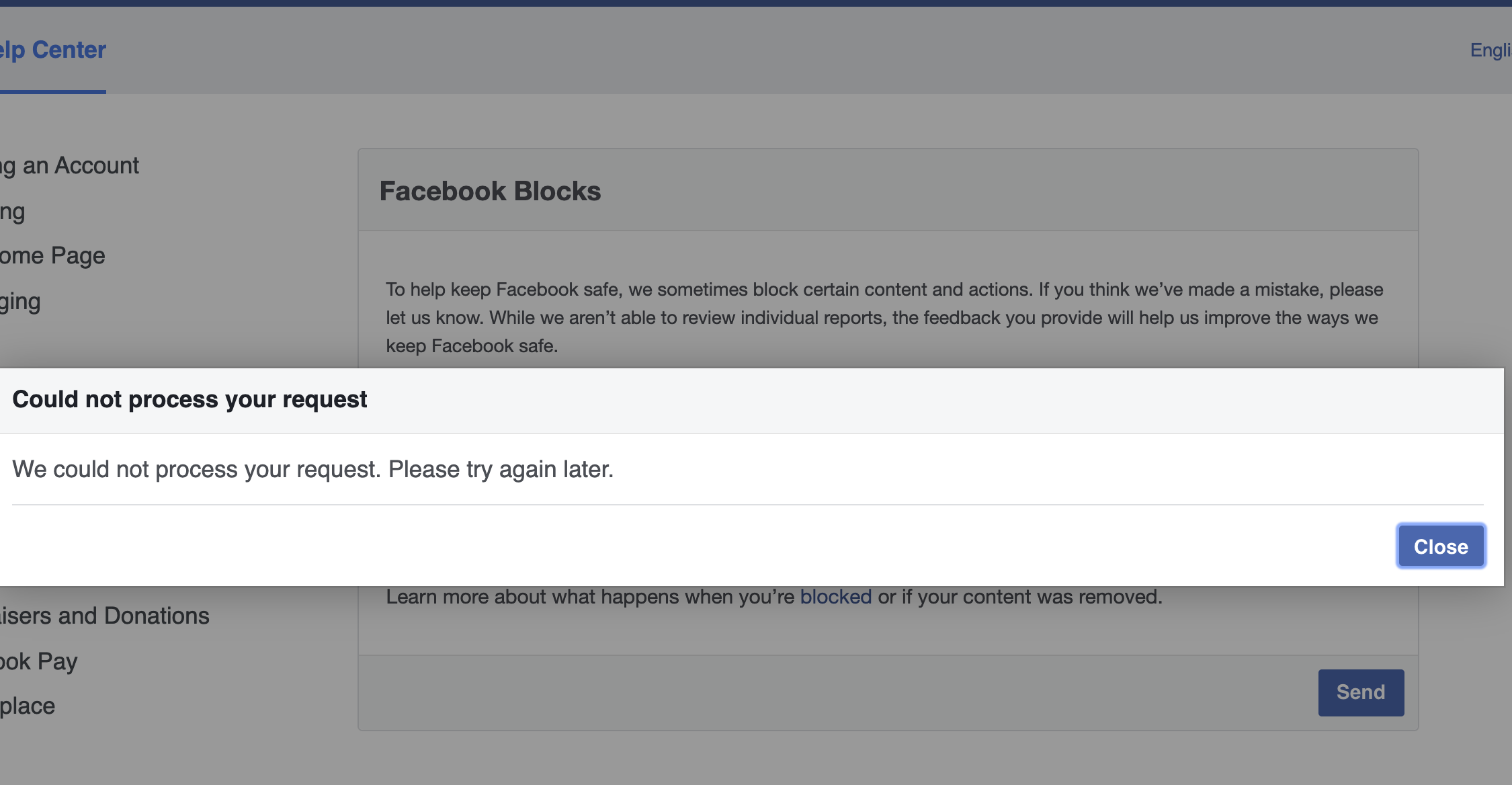

But when I posted these scientifically grounded facts on X, the platform’s automated system erased my comments without explanation. They were deleted, not just hidden from view: I downloaded my full archive, and they were missing there, too.

My comments weren’t profane, political, or wrong. They were thoughtful, scientific, and correct, yet they were deleted silently. This wasn’t political censorship but something subtler: algorithmic sanitation, a form of pattern-mismatch suppression that filters out novelty and penalizes nuance using crude heuristics. Such an approach is not optimized, perfected, or rationalized, just “good enough” to do what is wanted.

Algorithmic Rule Enforcement

Algorithmic rule enforcement is used to control information exchange, applying automated filters to enforce conformity. This includes detecting behaviors that resemble spam or fake engagement. It can manifest as quiet removals, shadowbans, or deprioritization.

Rules may not be disclosed and can change at any time without notice. Enforcement may occur without warning or explanation, without indication of what rule was triggered or how it applied, what exactly the consequences are, or how long they will last. The platform’s filtering algorithms determine informational reach and scope: what gets delivered or buried, and to whom, directly or through association.

While policy appears liberal and enforcement light, users feel confident expressing themselves, trusting a platform associated with free speech advocacy. But algorithmic enforcement can change at any time, without prior notice. And with X’s dominance via network effect, those shifts don’t just affect individual users, they shape social discourse.

The effect shapes habits, opinions, and discourse at scale, resembling Edward Bernays’ “conscious and intelligent manipulation”. Unlike Bernays’ carefully orchestrated campaigns, however, today’s algorithmic enforcement is mechanical, heuristic-driven, and possibly incidental: emergent rather than intentional from the outset. It is also subject to change at any time, which makes it less predictable, less accountable, and arguably more insidious.

Bernays emphasized subtle, indirect influence to shape perception and behavior without the subject realizing they’re being guided. In the case of X, no warning is given, no clear rule is cited, and the deletion happens silently. The result? Users self-censor, adapt their tone, or withdraw entirely — not because of overt coercion, but because of invisible nudges.

Pattern-Mismatch Suppression

Pattern-mismatch suppression is a form of algorithmic filtering that quietly removes content not because it’s false or harmful, but because it doesn’t match the statistical or stylistic expectations of the system. It’s not rule-based moderation but heuristic pattern policing, where novelty, nuance, and off-template expression are treated as noise.

You never know what triggered it. The rules aren’t disclosed. There’s no explanation, no appeal — just absence.
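To make the idea concrete, here is a toy sketch of what a pattern-mismatch filter could look like. It is purely hypothetical: X has not disclosed how its filters work, and every name, the sample posts, and the 0.5 threshold below are invented for illustration. The point is only that such a rule never evaluates whether a post is true or useful, just how much it resembles what came before.

```python
import re

def words(text):
    """Lowercase a post and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def build_vocabulary(reference_posts):
    """Collect every word seen in a set of 'typical' posts."""
    vocab = set()
    for post in reference_posts:
        vocab.update(words(post))
    return vocab

def mismatch_score(post, vocab):
    """Fraction of the post's words that never appear in the reference set."""
    tokens = words(post)
    if not tokens:
        return 0.0
    unseen = sum(1 for t in tokens if t not in vocab)
    return unseen / len(tokens)

def is_suppressed(post, vocab, threshold=0.5):
    # Hypothetical rule: drop anything too unlike the reference posts.
    # Nothing here checks accuracy, intent, or usefulness.
    return mismatch_score(post, vocab) > threshold

# Invented stand-ins for "normal" engagement.
TYPICAL = ["so true", "this is great", "love this so much"]
VOCAB = build_vocabulary(TYPICAL)
```

Under this toy rule, a reply like "this is so great" passes untouched, while "Annatto carotenoids bind to whey protein" is dropped simply because none of its words have been seen before.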

Algorithms silently penalize content that fails to match vague, unspoken rules. This crudely trains the mind to repress original thought by associating it with erasure, irrelevance, and isolation. Eventually, they don’t have to delete your ideas — they’ve already shaped your individual thought patterns.

The Investigation: Forensic Analysis of Digital Erasure

This wasn’t flagged as hate speech or misinformation. It wasn’t political, profane, or rude — and that’s not to say censoring political or so-called “hateful” speech is justified, only that this wasn’t that. It was correct, quiet, and useful. And the system still removed it.

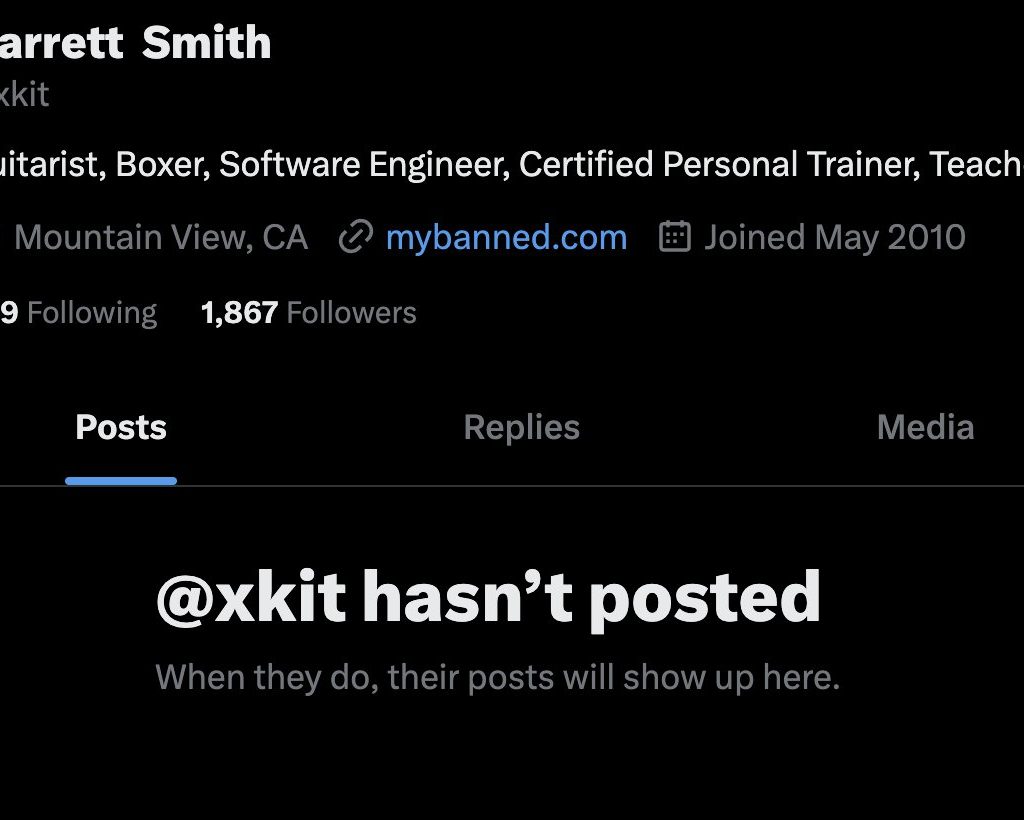

Noticing the removal, I logged out to check what others could see. My timeline was gone, replaced with the lie: “@xkit hasn’t posted yet.”

To verify what happened, I downloaded my X archive.

My missing replies (about cheese, phytochemicals, whey, etc.) were also missing from the archive. They hadn’t just been hidden, but quietly deleted by the system, without notice. The archive confirmed what I suspected: this wasn’t merely shadow suppression, it was full removal — thought erasure and profile revision. Silent, automated, and unaccountable.
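For anyone who wants to repeat this check, the following is a minimal sketch of how an archive could be searched for keywords. It rests on assumptions about the archive layout: that posts live in a JavaScript file (such as `data/tweets.js`) consisting of an assignment followed by a JSON array, and that each entry keeps its text under `tweet` and `full_text`. The function names and the sample string are hypothetical, and the layout may differ between archive versions.

```python
import json
from pathlib import Path

def parse_archive_js(js_text):
    """Parse an archive .js file: a JS assignment followed by a JSON array."""
    start = js_text.index("[")  # skip the "window.YTD... = " prefix (assumed)
    return json.loads(js_text[start:])

def posts_mentioning(entries, keywords):
    """Return the text of every post containing any keyword, ignoring case."""
    hits = []
    for entry in entries:
        # Assumed layout: each entry wraps the post under a "tweet" key.
        text = entry.get("tweet", entry).get("full_text", "")
        lowered = text.lower()
        if any(k.lower() in lowered for k in keywords):
            hits.append(text)
    return hits

def check_archive(path, keywords):
    """Load an archive file from disk and search it for the keywords."""
    js_text = Path(path).read_text(encoding="utf-8")
    return posts_mentioning(parse_archive_js(js_text), keywords)

# Tiny invented stand-in for a real archive file, for illustration only.
SAMPLE = 'window.YTD.tweets.part0 = [{"tweet": {"full_text": "Good morning"}}]'
```

Calling `check_archive("data/tweets.js", ["cheese", "whey", "annatto"])` on an unpacked archive would list any surviving posts that mention those words. An empty result for posts you know you wrote is the kind of absence described above.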

Yet no notice from the ‘free speech’ platform.

The Stakes: What We Lose When Nuance Dies

If you don’t know it’s happening, you won’t appeal. And that’s the point — it reduces support load. Unlike my comments, their automated system isn’t designed to foster discourse; it’s designed for control, penalizing normative deviance.

Automated erasure may apply to any deviation from the “normal range” of acceptable thought, even if that deviation is scientific, quiet, and useful. The platform’s implicit policy could be summed up as social conformity curation: “Make it shorter, simpler, and like everything else.” It is an insidious policy whose mechanisms are revealed through its blind spots, such as the inadvertent censoring of food science comments.

The Broader Pattern

Thinking carefully, sharing knowledge, elevating the conversation? The automaton determines that’s out of line.

X isn’t merely a censorship platform; it’s a social curation tool. Its bot deleted my insightful scientific facts about cheese, whey, and phytochemicals, then shadowbanned me with no notice. Thoughtful, precise, scientific? Pfft. No! The algorithm has no category for that. It can’t tell the difference between an insight and a scam unless the content has already been validated by prior mass engagement, so it buries it. It’s not that X objected to what I said. It didn’t even read it. Its bot saw a pattern mismatch and dropped me from view.

Cognitive Conditioning

There’s a bleak irony here, like Idiocracy’s “Not Sure” nametag: a system so crude it acts on surface inputs without comprehension. Both that system and X’s filter reduce identity and intent to pattern compliance. Fall outside expected templates, and the system doesn’t ask questions. It just proceeds, blind to meaning, a crude contrast to human complexity.

Platforms like X exemplify this dynamic. Algorithmic rule enforcement is convenient but arbitrary, especially when rules are hidden. When enforcement is lax, users feel a false sense of safety under an illusion of trust in a faceless system. But when a new or previously unknown policy is suddenly enforced, it comes as a surprise — one likely to repeat, thanks to the network effects platforms like X enjoy.

Their system lacks interpretive capacity. It can’t assess meaning or intent, only patterns, engagement, and ironically bad “trust” heuristics (look at Grok as an example of X’s capabilities). It’s designed not to disagree with you, but to quietly erase you when you fall outside the template. If your signal doesn’t match the shape of existing noise, it gets thoughtlessly filtered out, and your thoughtfulness is punished by default. It isn’t even malicious, just lazy. This isn’t just unintelligent; it’s anti-intelligent.